mirror of

https://github.com/TeamWiseFlow/wiseflow.git

synced 2025-01-23 10:50:25 +08:00

new llm crawler

This commit is contained in:

parent

b683073fde

commit

23b7f76d9e

118

README.md


@ -1,34 +1,92 @@

|

|||||||

# Chief Intelligence Officer

|

# 📈 Chief Intelligence Officer (Wiseflow)

|

||||||

|

|

||||||

**Welcome to Chief Intelligence Officer**

|

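**Chief Intelligence Officer** (Wiseflow) is an agile information-mining tool that distills concise messages from sources such as social platform posts, WeChat official accounts, and group chats, tags and categorizes them automatically, and uploads them to a database. It helps you cope with information overload and keep track of the content you care about most.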

|

||||||

|

|

||||||

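Chief Intelligence Officer (wiseflow) is a complete system for collecting and analyzing domain (industry) intelligence. It is open source and free for users; we also offer a more specialized industry intelligence subscription service (with support for social network platforms). Contact us for more information.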

|

## 🌟 Features

|

||||||

|

|

||||||

Email: 35252986@qq.com

|

- 🚀 **Native LLM application**

|

||||||

|

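We carefully selected the best-suited 7B~9B open-source models to keep usage costs as low as possible and to let data-sensitive users switch to local deployment at any time.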

|

||||||

|

|

||||||

**Main features of the current version of Chief Intelligence Officer:**

|

- 🌱 **Lightweight design**

|

||||||

|

No vector model is used, so system overhead is small and no GPU is required; it is suitable for any hardware environment.

|

||||||

|

|

||||||

|

- 🗃️ **Intelligent information extraction and classification**

|

||||||

|

Automatically extracts information from a variety of sources, then tags and categorizes it according to the user's focus points.

|

||||||

|

|

||||||

|

- 🌍 **Real-time dynamic knowledge base**

|

||||||

|

Can be integrated with existing RAG projects as a dynamic knowledge base to improve knowledge-management efficiency.

|

||||||

|

|

||||||

|

- 📦 **The popular Pocketbase database**

|

||||||

|

The database and interface use Pocketbase, so the data is easy to access whether you read it directly on the web or through Go tools.

|

||||||

|

|

||||||

|

## 🔄 Comparison

|

||||||

|

|

||||||

|

| Feature | Chief Intelligence Officer (Wiseflow) | Markdown_crawler | firecrawler | RAG projects |
| -------------- | ----------------------- | ----------------- | ----------- | ---------------- |
| **Information extraction** | ✅ Efficient | ❌ Limited to Markdown | ❌ Web pages only | ⚠️ Post-extraction processing |
| **Information classification** | ✅ Automatic | ❌ Manual | ❌ Manual | ⚠️ Depends on external tools |
| **Model dependency** | ✅ 7B~9B open-source models | ❌ No model | ❌ No model | ✅ Vector models |
| **Hardware requirements** | ✅ No GPU needed | ✅ No GPU needed | ✅ No GPU needed | ⚠️ Depends on the implementation |
| **Integrability** | ✅ Dynamic knowledge base | ❌ Low | ❌ Low | ✅ High |

|

||||||

|

|

||||||

|

## 📥 Installation and usage

|

||||||

|

|

||||||

|

1. **Clone the repository**

|

||||||

|

|

||||||

|

```bash

|

||||||

|

git clone https://github.com/your-username/wiseflow.git

|

||||||

|

cd wiseflow

|

||||||

|

```

|

||||||

|

|

||||||

|

2. **Install dependencies**

|

||||||

|

|

||||||

|

```bash

|

||||||

|

pip install -r requirements.txt

|

||||||

|

```

|

||||||

|

|

||||||

|

3. **Configure**

|

||||||

|

|

||||||

|

Configure your information sources and focus points in `config.yaml`.

|

||||||

|

|

||||||

|

4. **Start the service**

|

||||||

|

|

||||||

|

```bash

|

||||||

|

python main.py

|

||||||

|

```

|

||||||

|

|

||||||

|

5. **Open the web interface**

|

||||||

|

|

||||||

|

Open a browser and go to `http://localhost:8000`.

|

||||||

|

|

||||||

|

## 📚 Documentation and support

|

||||||

|

|

||||||

|

- [Usage documentation](docs/usage.md)

|

||||||

|

- [Developer guide](docs/developer.md)

|

||||||

|

- [FAQ](docs/faq.md)

|

||||||

|

|

||||||

|

## 🤝 Contributing

|

||||||

|

|

||||||

|

Contributions are welcome! Please read the [contribution guide](CONTRIBUTING.md) for details.

|

||||||

|

|

||||||

|

## 🛡️ License

|

||||||

|

|

||||||

|

This project is open source under [Apache 2.0](LICENSE).

|

||||||

|

|

||||||

|

For commercial use and custom cooperation, please contact Email: 35252986@qq.com

|

||||||

|

|

||||||

|

(Commercial users, please contact us to register; the product is promised to remain free forever.)

|

||||||

|

|

||||||

|

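(For custom clients, we provide dedicated parser development, tuning of information extraction and classification strategies, LLM fine-tuning, and private deployment, tailored to your sources and data.)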

|

||||||

|

|

||||||

|

## 📬 Contact

|

||||||

|

|

||||||

|

For questions or suggestions, please contact us via an [issue](https://github.com/your-username/wiseflow/issues).

|

||||||

|

|

||||||

- Daily briefing list for focus points

|

|

||||||

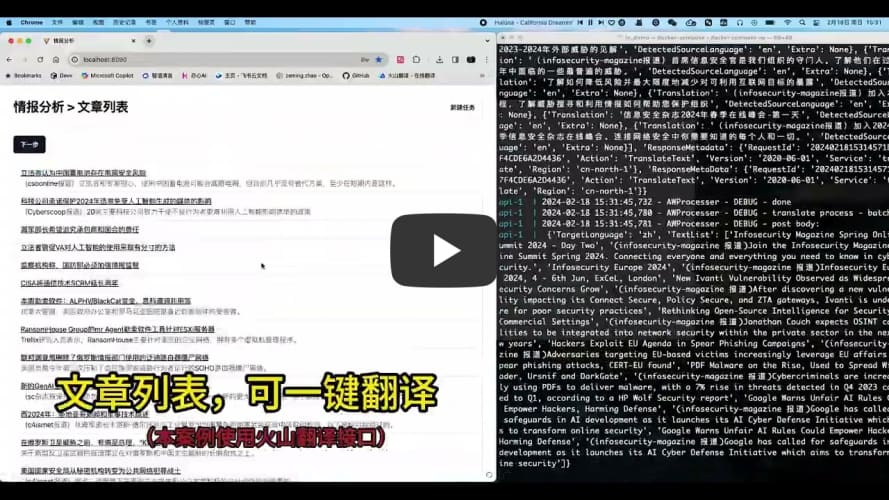

- List and detail views of stored articles, with one-click translation (Simplified Chinese)

|

|

||||||

- One-click search for focus points (using the Sogou engine)

|

|

||||||

- One-click report generation for focus points (directly produces a Word document)

|

|

||||||

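- Industry intelligence subscription (supports specified sources, including WeChat official accounts, Xiaohongshu, and other social network platforms; email 35252986@qq.com to enable)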

|

|

||||||

- Database management

|

|

||||||

- Supports integrating custom crawlers for specific sites, and provides local scheduled scan tasks…

|

|

||||||

|

|

||||||

## change log

|

## change log

|

||||||

|

|

||||||

[2024.5.8] Added support for the openai SDK; all LLM services compatible with the openai SDK can now be used by calling llms.openai_wrapper. See [client/backend/llms/README.md](client/backend/llms/README.md)

|

[2024.5.8] Added support for the openai SDK; all LLM services compatible with the openai SDK can now be used by calling llms.openai_wrapper. See [client/backend/llms/README.md](client/backend/llms/README.md)

|

||||||

|

|

||||||

**Product introduction video:**

|

|

||||||

|

|

||||||

[](https://www.bilibili.com/video/BV17F4m1w7Ed/?share_source=copy_web&vd_source=5ad458dc9dae823257e82e48e0751e25 "wiseflow repo demo")

|

|

||||||

|

|

||||||

**If the video does not open, use these links**

|

|

||||||

|

|

||||||

Youtube: https://www.youtube.com/watch?v=80KqYgE8utE&t=8s

|

|

||||||

|

|

||||||

Bilibili: https://www.bilibili.com/video/BV17F4m1w7Ed/?share_source=copy_web&vd_source=5ad458dc9dae823257e82e48e0751e25

|

|

||||||

|

|

||||||

## getting started

|

## getting started

|

||||||

|

|

||||||

@ -41,29 +99,11 @@ git clone git@github.com:TeamWiseFlow/wiseflow.git

|

|||||||

cd wiseflow/client

|

cd wiseflow/client

|

||||||

```

|

```

|

||||||

|

|

||||||

2. Apply for services such as Volcano Translate and Alibaba Cloud dashscope (local LLM deployment is also supported);

|

|

||||||

|

|

||||||

3. Apply for the NetEase Youdao BCE model (free, open source);

|

|

||||||

|

|

||||||

4. Edit the .env file, using /client/env_sample as a reference;

|

4. Edit the .env file, using /client/env_sample as a reference;

|

||||||

|

|

||||||

5. Run `docker compose up -d` to start (the first run has to build the image, which takes a while)

|

5. Run `docker compose up -d` to start (the first run has to build the image, which takes a while)

|

||||||

|

|

||||||

|

|

||||||

For details, see [client/README.md](client/README.md)

|

|

||||||

|

|

||||||

## SDK & API (coming soon)

|

|

||||||

|

|

||||||

We will soon provide a local SDK and a subscribe service API.

|

|

||||||

|

|

||||||

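With the local SDK, users can sync subscription data without the client and integrate it into any system locally through a Python API, **which is especially suitable for RAG projects** (collaboration welcome; email 35252986@qq.com)!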

|

|

||||||

|

|

||||||

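The subscribe service will let users attach subscription data query and push services to their own WeChat official accounts, WeChat customer service, websites, and GPTs bot platforms (we will also release plugins for each platform).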

|

|

||||||

|

|

||||||

### wiseflow architecture diagram

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

# Citation

|

# Citation

|

||||||

|

|

||||||

If you reference or cite part or all of this project in related work, please include the following information:

|

If you reference or cite part or all of this project in related work, please include the following information:

|

||||||

|

|||||||

@ -8,3 +8,4 @@ chardet

|

|||||||

pocketbase

|

pocketbase

|

||||||

pydantic

|

pydantic

|

||||||

uvicorn

|

uvicorn

|

||||||

|

json_repair==0.*

|

||||||

@ -14,6 +14,7 @@ from requests.compat import urljoin

|

|||||||

import chardet

|

import chardet

|

||||||

from utils.general_utils import extract_and_convert_dates

|

from utils.general_utils import extract_and_convert_dates

|

||||||

import asyncio

|

import asyncio

|

||||||

|

import json_repair

|

||||||

|

|

||||||

|

|

||||||

model = os.environ.get('HTML_PARSE_MODEL', 'gpt-3.5-turbo')

|

model = os.environ.get('HTML_PARSE_MODEL', 'gpt-3.5-turbo')

|

||||||

@ -39,47 +40,18 @@ def text_from_soup(soup: BeautifulSoup) -> str:

|

|||||||

return text.strip()

|

return text.strip()

|

||||||

|

|

||||||

|

|

||||||

def parse_html_content(out: str) -> dict:

|

sys_info = '''Your role is to function as an HTML parser, tasked with analyzing a segment of HTML code. Extract the following metadata from the given HTML snippet: the document's title, summary or abstract, main content, and the publication date. Ensure that your response adheres to the JSON format outlined below, encapsulating the extracted information accurately:

|

||||||

dct = {'title': '', 'abstract': '', 'content': '', 'publish_time': ''}

|

|

||||||

if '"""' in out:

|

|

||||||

semaget = out.split('"""')

|

|

||||||

if len(semaget) > 1:

|

|

||||||

result = semaget[1].strip()

|

|

||||||

else:

|

|

||||||

result = semaget[0].strip()

|

|

||||||

else:

|

|

||||||

result = out.strip()

|

|

||||||

|

|

||||||

while result.endswith('"'):

|

```json

|

||||||

result = result[:-1]

|

{

|

||||||

result = result.strip()

|

"title": "The Document's Title",

|

||||||

|

"abstract": "A concise overview or summary of the content",

|

||||||

|

"content": "The primary textual content of the article",

|

||||||

|

"publish_date": "The publication date in YYYY-MM-DD format"

|

||||||

|

}

|

||||||

|

```

|

||||||

|

|

||||||

dict_strs = result.split('||')

|

Please structure your output precisely as demonstrated, with each field populated correspondingly to the details found within the HTML code.

|

||||||

if not dict_strs:

|

|

||||||

dict_strs = result.split('|||')

|

|

||||||

if not dict_strs:

|

|

||||||

return dct

|

|

||||||

if len(dict_strs) == 3:

|

|

||||||

dct['title'] = dict_strs[0].strip()

|

|

||||||

dct['content'] = dict_strs[1].strip()

|

|

||||||

elif len(dict_strs) == 4:

|

|

||||||

dct['title'] = dict_strs[0].strip()

|

|

||||||

dct['content'] = dict_strs[2].strip()

|

|

||||||

dct['abstract'] = dict_strs[1].strip()

|

|

||||||

else:

|

|

||||||

return dct

|

|

||||||

date_str = extract_and_convert_dates(dict_strs[-1])

|

|

||||||

if date_str:

|

|

||||||

dct['publish_time'] = date_str

|

|

||||||

else:

|

|

||||||

dct['publish_time'] = datetime.strftime(datetime.today(), "%Y%m%d")

|

|

||||||

return dct

|

|

||||||

|

|

||||||

|

|

||||||

sys_info = '''As an HTML parser, you'll receive a block of HTML code. Your task is to extract its title, summary, content, and publication date, with the date formatted as YYYY-MM-DD. Return the results in the following format:

|

|

||||||

"""

|

|

||||||

Title||Summary||Content||Release Date YYYY-MM-DD

|

|

||||||

"""

|

|

||||||

'''

|

'''

|

||||||

|

|

||||||

|

|
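The hunk above replaces the `Title||Summary||Content||Release Date` output format with a JSON object. The commit does not say why, so the following is only one plausible reading, offered as an assumption: the removed `parse_html_content` accepted exactly 3 or 4 `||`-separated fields and returned an empty result otherwise, so any article text that itself contains `||` would be rejected. The sample strings and the `field_count` helper are hypothetical, written only to show the effect.

```python
# Hypothetical sample outputs; they are not taken from the repository.
clean = 'Title||Summary||Body text||2024-05-08'
noisy = 'Title||Summary||Use a || b in shell||2024-05-08'


def field_count(llm_output: str) -> int:
    """Count fields the way the removed parser did: split on '||'."""
    return len(llm_output.strip().split('||'))


# The removed parser only handled 3 or 4 fields; other counts returned the empty dict.
print(field_count(clean))
print(field_count(noisy))
```

With a JSON object, the delimiter can appear inside a field value without changing the number of fields.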

||||||

@ -121,17 +93,25 @@ async def llm_crawler(url: str, logger) -> (int, dict):

|

|||||||

{"role": "user", "content": html_text}

|

{"role": "user", "content": html_text}

|

||||||

]

|

]

|

||||||

llm_output = openai_llm(messages, model=model, logger=logger)

|

llm_output = openai_llm(messages, model=model, logger=logger)

|

||||||

try:

|

decoded_object = json_repair.repair_json(llm_output, return_objects=True)

|

||||||

info = parse_html_content(llm_output)

|

logger.debug(f"decoded_object: {decoded_object}")

|

||||||

except:

|

if not isinstance(decoded_object, dict):

|

||||||

msg = f"can not parse {llm_output}"

|

logger.debug("failed to parse")

|

||||||

logger.debug(msg)

|

|

||||||

return 0, {}

|

return 0, {}

|

||||||

|

|

||||||

if len(info['title']) < 4 or len(info['content']) < 24:

|

for key in ['title', 'abstract', 'content']:

|

||||||

logger.debug(f"{info} not valid")

|

if key not in decoded_object:

|

||||||

|

logger.debug(f"{key} not in decoded_object")

|

||||||

return 0, {}

|

return 0, {}

|

||||||

|

|

||||||

|

info = {'title': decoded_object['title'], 'abstract': decoded_object['abstract'], 'content': decoded_object['content']}

|

||||||

|

date_str = decoded_object.get('publish_date', '')

|

||||||

|

|

||||||

|

if date_str:

|

||||||

|

info['publish_time'] = extract_and_convert_dates(date_str)

|

||||||

|

else:

|

||||||

|

info['publish_time'] = datetime.strftime(datetime.today(), "%Y%m%d")

|

||||||

|

|

||||||

info["url"] = url

|

info["url"] = url

|

||||||

# Extract the picture link, it will be empty if it cannot be extracted.

|

# Extract the picture link, it will be empty if it cannot be extracted.

|

||||||

image_links = []

|

image_links = []

|

||||||

|
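The new side of this hunk decodes the model output, checks that it is a dict containing `title`, `abstract`, and `content`, and falls back to today's date when `publish_date` is missing. A minimal standalone sketch of that flow follows, under stated assumptions: `build_info` and its `decode` parameter are hypothetical names, the standard-library `json.loads` stands in for `json_repair.repair_json(llm_output, return_objects=True)`, and the date is kept as the raw string because `extract_and_convert_dates` is not shown in the diff.

```python
import json
from datetime import datetime
from typing import Callable

REQUIRED_KEYS = ('title', 'abstract', 'content')


def build_info(llm_output: str, url: str,
               decode: Callable[[str], object] = json.loads) -> dict:
    """Return the article dict, or {} when the model output is unusable."""
    try:
        decoded = decode(llm_output)
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        return {}
    # Mirror the commit's checks: the result must be a dict with all required keys.
    if not isinstance(decoded, dict):
        return {}
    if any(key not in decoded for key in REQUIRED_KEYS):
        return {}
    info = {key: decoded[key] for key in REQUIRED_KEYS}
    # Assumption: keep the raw date string; the commit normalizes it with a helper
    # that is not visible in this diff.
    info['publish_time'] = decoded.get('publish_date') or datetime.today().strftime('%Y%m%d')
    info['url'] = url
    return info
```

To follow the commit more closely, a caller could pass `decode=lambda s: json_repair.repair_json(s, return_objects=True)`, which is the call the diff introduces.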

|||||||

@ -39,7 +39,7 @@ async def simple_crawler(url: str, logger) -> (int, dict):

|

|||||||

try:

|

try:

|

||||||

result = extractor.extract(text)

|

result = extractor.extract(text)

|

||||||

except Exception as e:

|

except Exception as e:

|

||||||

logger.info(f"gne extracct error: {e}")

|

logger.info(f"gne extract error: {e}")

|

||||||

return 0, {}

|

return 0, {}

|

||||||

|

|

||||||

if not result:

|

if not result:

|

||||||

|

|||||||
